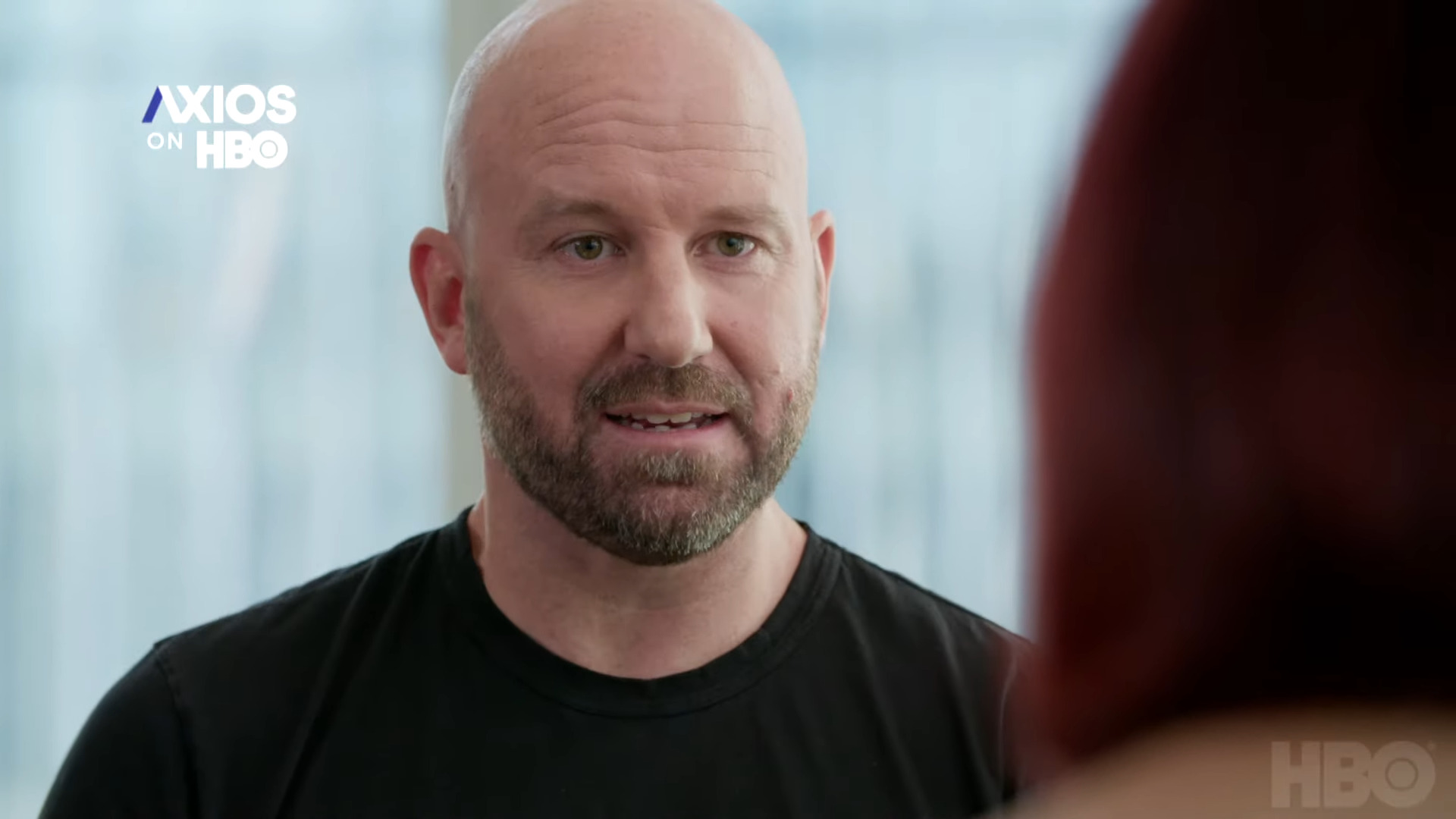

US media website Axios interviewed Andrew Bosworth, Facebook's future chief technology officer, about fake news and the platform's role in spreading it. His answers and rhetoric suggest that Facebook still shows no sign of self-doubt.

“Even if we were spending all of our money on prevention, that wouldn't stop people from seeing things they didn't like. It will not eliminate every possibility that people will use the platform in a malicious manner.” The remark comes from Andrew Bosworth, Facebook's vice president of virtual reality, who will take over as chief technology officer at Meta, Facebook's parent company, in January.

The social network's future chief technology officer gave an interview to Ina Fried, a journalist at Axios, who asked him what more Facebook could do to reduce its “negative effects.” The answer from the future head of all of the platform's technologies is bewildering.

“It is individuals who choose whether or not to believe the posts they see on the platform, and they are the ones who choose whether or not to share certain content. I would never allow myself to say they have no right to do so because I don't agree with what they are saying.” It is true that Facebook users share fake news, and they are the ones who create it in the first place. But denying that the platform and its algorithm bear any responsibility for spreading false news is inaccurate at best and dangerous at worst.

“I am proud of the tools we built.”

Andrew Bosworth's interview comes as Facebook has been under fire for several months, especially since the publication of the Facebook Files. The leak of a large number of internal documents by whistleblower Frances Haugen has allowed many media outlets to expose certain practices of the social network. Besides revelations that moderation reportedly does not apply in the same way to everyone, and that the social network allegedly favored more divisive content, these documents also point to more serious facts: Facebook reportedly helped spread fake news about Covid, and the platform allegedly failed to put in place sufficient moderation tools to combat violent messages effectively in all languages.

However, Andrew Bosworth never mentioned these issues during his interview, even as he discussed the abundance of fake news and Facebook's role in spreading it. Instead, the future tech chief blamed the social network's users. “It is individuals who choose whether or not to believe the posts they see on the platform,” he said. “They are the ones who choose whether or not to share certain content, and I will not allow myself to say that they have no right to do so because I do not agree with what they are saying.”

“If your democracy cannot tolerate the rhetoric of some people, I am not sure what kind of democracy it is,” Andrew Bosworth continued when the journalist asked him about the danger fake news poses to democracy. “I understand that these people's rhetoric can be dangerous, I really do, but we are fundamentally a democratic technology. I think we can give users more information and let everyone communicate with others, and this opportunity should not be reserved for a small elite. I'm proud of the tools we built.”

Blaming users seems a rather thin answer given the many nuances to consider, the known strength of algorithmic biases, and their impact on real life. Nor can the platform's responsibility be dismissed with a wave of the hand: it has become clear (even to Facebook itself) that technology is not “neutral” and that companies make choices.

The insufficient number of moderators in some languages, and the poor performance of the reporting buttons in others, show that Facebook has chosen not to invest enough in safety.

“Our ability to tell what is misinformation is itself questionable, so I'm really not saying we have enough knowledge and legitimacy to decide what another human should and shouldn't say, and who should listen,” concludes the future chief technology officer. This runs contrary to how tech platforms actually operate: day after day, they decide whether or not to moderate content according to their own terms of use.
