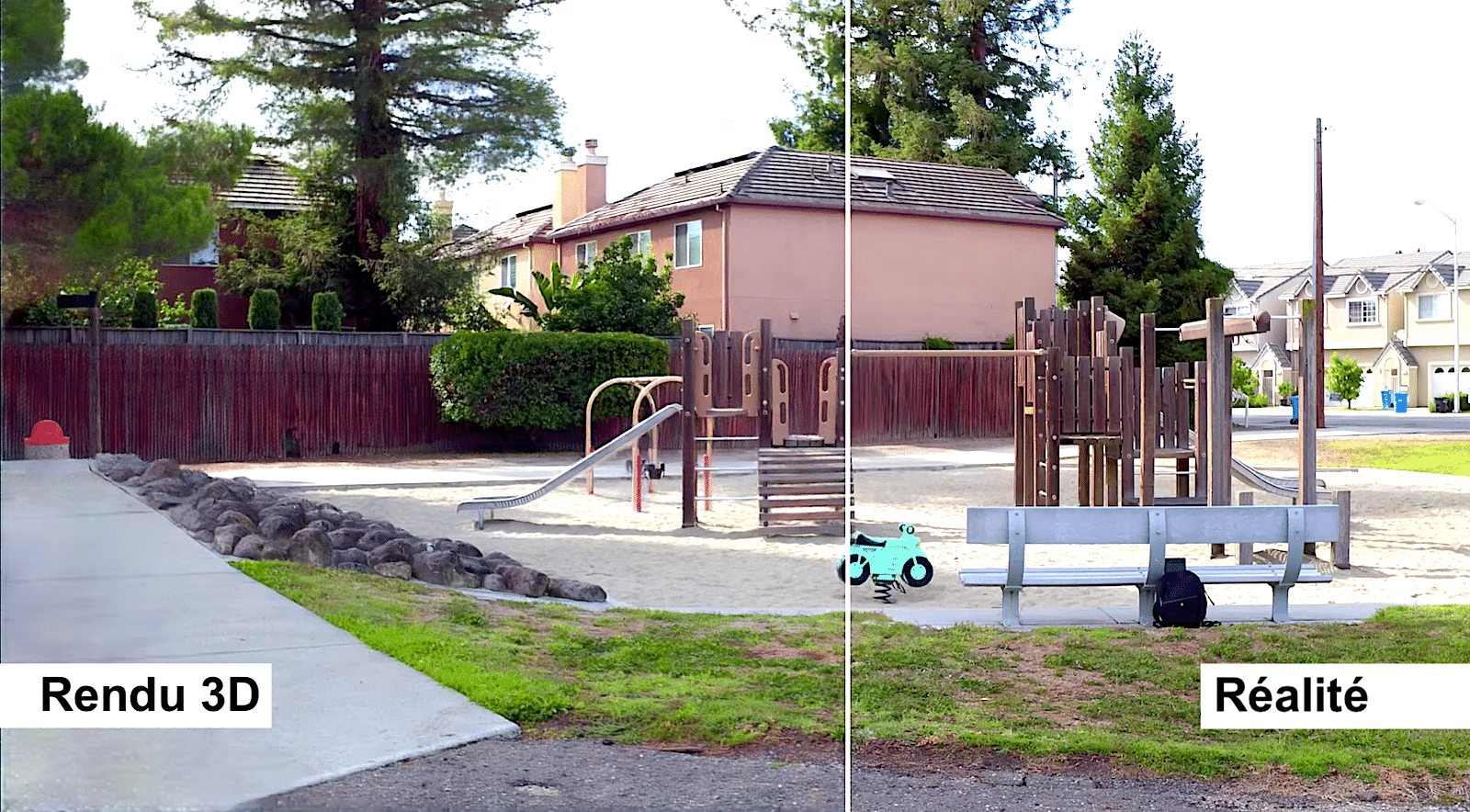

German researchers have developed an artificial intelligence capable of automatically creating 3D virtual environments from a few (2D) images. By simplifying the creation of realistic 3D spaces, the potential applications are diverse, ranging from virtual reality to research, including cinema.

To create a 3D scene, the researchers just need to feed their AI with some images. Their neural network, which is able to accurately visualize and understand the appearance and operation of light of any 2D rendering, provides all the necessary information for the 3D creation and rendering stages, which are performed using the program. Colmap.

Here the most experienced of you will say to yourself: “But similar algorithms have already been invented!”. Yes, certainly similar, but no more … In fact, this neural network is very different from previous systems: it is able to extract real physical properties from still images! ” We can change the position of the camera and thus get a new view of the object ”, explains Darius Ruckert, who led the study, from the University of Erlangen-Nuremberg (Germany).

An explorable 3D world of two images…

To sum up the capabilities of the system, the researchers suggest that an explorable 3D world could be created from just two images (although it wouldn’t be very detailed in this case). ” The more photos you have, the better the quality , explains Rückert. ” The model can’t create things they haven’t seen ».

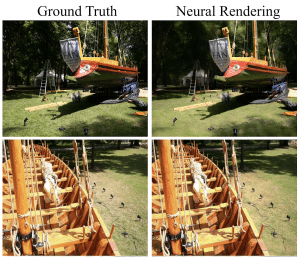

Some of the more detailed examples of created environments use between 300 and 350 images taken from different angles. But Rückert hopes to improve the system by having it simulate how light reflects off objects in a scene to reach the camera, which means fewer still shots will be needed to render in 3D resolution.

« Each point is projected into space and its neural “descriptor” is combined into a polycyclic neural image. This image is processed by a deep convolutional neural network to generate an HDR image of the scene. » Explain to researchers in their document. From this, the convolutional neural network converts the HDR (High Dynamic Range) image into LDR (Low Dynamic Range). It is these optimized self-generated images that are then used to interpret the environment, in order to create a 3D space by merging and traversing the data.

Researchers can train the end-to-end neural network using images, point clouds, or camera settings as inputs. ” Since all the steps are differentiable, the parameters to be optimized can be freely chosen, for example camera mode, camera model or texture color. ‘, they add.

Some concepts: high dynamic range imaging (HDRI) systems are grouped together allowing the capture and/or display of an image (still or moving) of a scene with very varied levels of brightness. get a picture HDR It can also be done with a conventional camera by taking several photos LDR (low dynamic range) and then integrated with the software.

“Unparalleled display quality”

« So far, photo-realistic creation from 3D reconstructions has not been fully automated and still has notable flaws. “,” explains Tim Field, founder of New York-based Abound Labs, which is working on 3D capture software.

Although Field notes that the system still requires accurate 3D data entry and is not yet functional for moving objects, “the display quality is unparalleled,” he says. ” It’s proof that mechanistic realism is possible “.Field believes this technology can be used to create cinematic visual effects and to provide tours of virtual reality locations.” This will speed up the already very dynamic search field for machine learning-based rendering of computer-generated images “Finally.

A video explaining the project and providing 3D renderings, posted by the researchers:

Source : arXiv

“Certified gamer. Problem solver. Internet enthusiast. Twitter scholar. Infuriatingly humble alcohol geek. Tv guru.”