Among the most promising applications of quantum computing, quantum machine learning is expected to make waves. But how this could be achieved is still something of a mystery.

IBM researchers now claim to have mathematically proven that, with a quantum approach, some machine learning problems can be solved faster than on conventional computers.

Machine learning is a well-established branch of artificial intelligence, and it is already used in many industries to solve different problems. This involves training an algorithm with large data sets, in order to allow the model to identify different patterns and ultimately calculate the best answer when new information is provided.

Quantum computing and machine learning

With larger data sets, a machine learning algorithm can be improved to provide more accurate answers, but this comes at a computational cost that quickly reaches the limits of traditional hardware. That’s why researchers hope that one day they will be able to harness the enormous computing power of quantum techniques to take machine learning models to the next level.

One method in particular, called “quantum kernels,” is the subject of many research articles. In this approach, a quantum computer intervenes for only part of the overall algorithm, by expanding the so-called feature space, that is, the set of properties used to characterize the data submitted to the model, such as ‘gender’ or ‘age’ if the system is trained to recognize patterns in people.

To put it simply, using a quantum kernel approach, a quantum computer can distinguish between a larger number of features and thus identify patterns even in a huge database, where a classical computer would see only random noise.
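The idea of enlarging the set of features can be illustrated with a purely classical toy example. The sketch below is not IBM's algorithm: the data, the `feature_map` function and the simple perceptron learner are all assumptions chosen for illustration. In machine learning terms, a kernel is the similarity score between two data points after they have been mapped into the larger feature space; in the quantum version, that score would be estimated on a quantum computer, while the rest of the training stays classical.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Kernel = Callable[[Vector, Vector], float]


def feature_map(x: Vector) -> List[float]:
    """Hypothetical classical feature map: add the product x1*x2 as a third feature."""
    return [x[0], x[1], x[0] * x[1]]


def mapped_kernel(a: Vector, b: Vector) -> float:
    """Similarity of two points, measured in the enlarged feature space."""
    return sum(u * v for u, v in zip(feature_map(a), feature_map(b)))


def linear_kernel(a: Vector, b: Vector) -> float:
    """Similarity of two points using only the original two features."""
    return sum(u * v for u, v in zip(a, b))


def decision_score(x: Vector, xs, ys, alphas, kernel: Kernel) -> float:
    """Weighted sum of similarities between x and every training point."""
    return sum(a * y * kernel(xi, x) for a, xi, y in zip(alphas, xs, ys))


def train(xs, ys, kernel: Kernel, epochs: int = 20) -> List[float]:
    """Kernel perceptron: raise a point's weight each time it is misclassified."""
    alphas = [0.0] * len(xs)
    for _ in range(epochs):
        mistakes = 0
        for i, (x, y) in enumerate(zip(xs, ys)):
            if y * decision_score(x, xs, ys, alphas, kernel) <= 0:
                alphas[i] += 1.0
                mistakes += 1
        if mistakes == 0:  # every training point is classified correctly
            break
    return alphas


def predict(x: Vector, xs, ys, alphas, kernel: Kernel) -> int:
    return 1 if decision_score(x, xs, ys, alphas, kernel) > 0 else -1


def accuracy(xs, ys, alphas, kernel: Kernel) -> float:
    hits = sum(predict(x, xs, ys, alphas, kernel) == y for x, y in zip(xs, ys))
    return hits / len(xs)


# Toy data: the label is +1 when both coordinates share a sign, -1 otherwise.
points = [(1.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]
labels = [1, -1, -1, 1]

alphas_mapped = train(points, labels, mapped_kernel)
alphas_linear = train(points, labels, linear_kernel)

accuracy_mapped = accuracy(points, labels, alphas_mapped, mapped_kernel)
accuracy_linear = accuracy(points, labels, alphas_linear, linear_kernel)

print("accuracy with enlarged feature space:", accuracy_mapped)
print("accuracy with original features only:", accuracy_linear)
```

With only the two original features, no straight-line rule can separate this data, so the learner cannot label all four points correctly. Once the extra feature is added, the same learner finds the pattern. The quantum kernel approach relies on the same mechanism, with a feature space that is believed to be hard for classical computers to work in.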

Classification problem

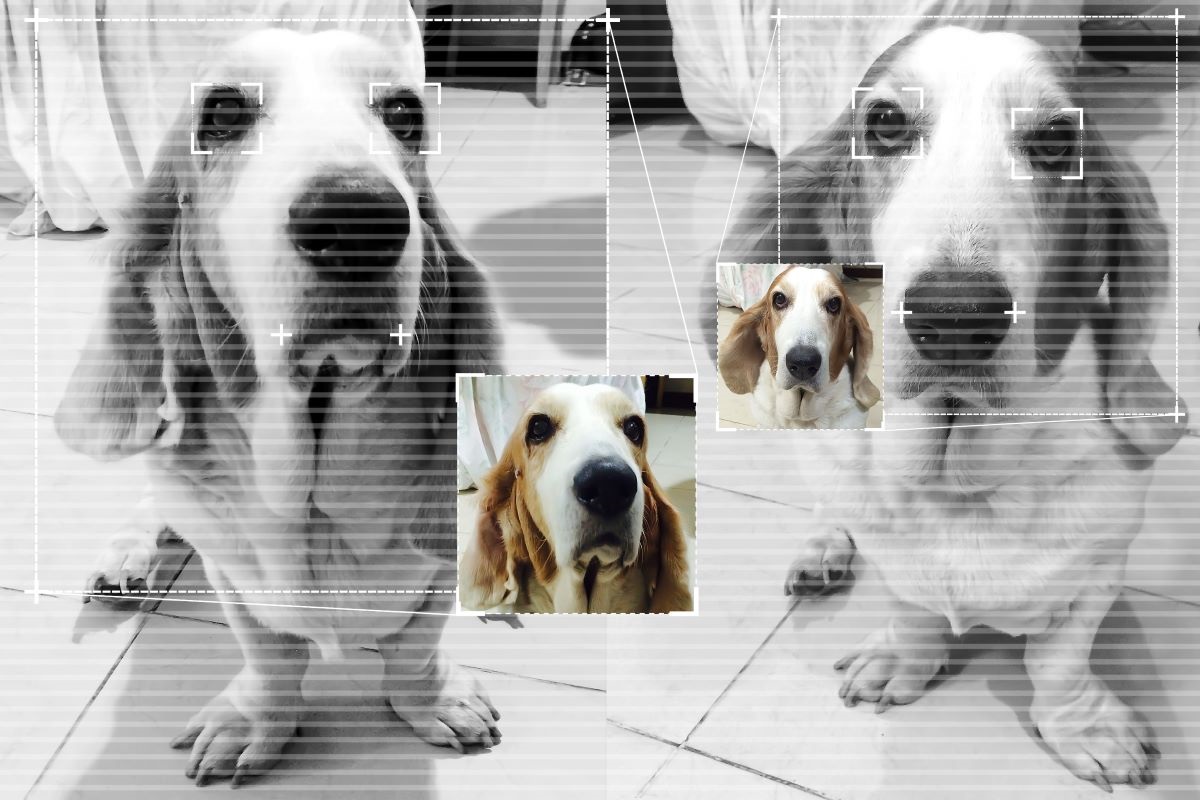

IBM researchers set out to use this approach to solve a specific type of machine learning problem called classification. As the IBM team explains, the most common example of a classification problem is a computer that receives pictures of dogs and cats and needs to be trained on this data set. The ultimate goal is for it to automatically label every future image it receives as either a dog or a cat, as accurately and as quickly as possible.

Big Blue scientists developed a new classification task and found that a quantum algorithm using the quantum kernel method was able to find relevant features in the data for accurate labeling, while for classical computers, the data set looked like random noise.

“The routine we are using is a general method that in principle can be applied to a wide range of problems,” Kristan Temme, a researcher at IBM Quantum, told ZDNet. “In our research paper, we formally demonstrated that a quantum kernel estimation routine can lead to learning algorithms that, for specific problems, go beyond classical machine learning approaches.”

End-to-end speedup

To demonstrate the advantage of the quantum method over the classical approach, the researchers created a classification problem for which data could be generated on a classical computer, and showed that no classical algorithm could do better than random guessing at solving it.

However, when the data was viewed through a quantum feature map, the quantum algorithm was able to predict the labels very accurately and quickly.
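To make the classical benchmark concrete, the short sketch below measures what guessing at random achieves on a two-class problem. The labels here are synthetic and balanced, an assumption made for illustration; they are not the data set built by the IBM team.

```python
import random


def random_guess_accuracy(labels, seed=0):
    """Fraction of labels matched by guessing +1 or -1 uniformly at random."""
    rng = random.Random(seed)
    guesses = [rng.choice((-1, 1)) for _ in labels]
    hits = sum(g == y for g, y in zip(guesses, labels))
    return hits / len(labels)


# Synthetic two-class labels (hypothetical, for illustration only).
label_rng = random.Random(1)
labels = [label_rng.choice((-1, 1)) for _ in range(10_000)]

baseline = random_guess_accuracy(labels)
print(f"random-guess accuracy: {baseline:.3f}")
```

The result hovers around one half, which is the score any classifier has to beat to show it has learned something about the data.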

The research team concludes, “This article can be considered an important step in the field of quantum machine learning, as it demonstrates an end-to-end speedup for a quantum kernel method implemented in a fault-tolerant manner with realistic assumptions.”

Limited use by current quantum devices

Of course, the classification task developed by scientists at IBM is specifically designed to determine whether the quantum kernel method is useful, and is still far from ready to apply to any kind of large-scale business problem.

According to Kristan Temme, this is mainly due to the limited size of IBM’s current quantum computers, which so far support fewer than 100 qubits. That is far from the thousands, if not millions, of qubits that scientists believe are necessary to start creating value in the field of quantum technologies.

“At this point, we can’t cite a specific use case and say ‘this will have a direct impact,’” the researcher adds. “We have not yet realized the implementation of a ‘large’ quantum machine learning algorithm. The size of this algorithm is of course directly related to the development of quantum hardware.”

The result is purely theoretical, but it opens the door to other research

IBM’s latest experiment also applies only to a specific type of classification problem in machine learning, and it does not mean that all machine learning problems will benefit from the use of quantum kernels.

But the results open the door to further research in this area, to see if other machine learning problems could benefit from using this method.

Much of this work is still up for debate at the moment, and the IBM team has acknowledged that any new discovery in this area comes with many caveats. But until quantum hardware improves, researchers are committed to continuing to prove the value of quantum algorithms, even if only from a mathematical point of view.

Source: ZDNet.com
